Predictive analytics provides foresight across healthcare, finance, marketing and more. But as businesses increasingly rely on algorithms that predict human responses (for example, how customers will react to a new product), ethical issues of bias, privacy and transparency have moved to the forefront.

And with sales and business intelligence trends increasingly driven by AI, data analytics and automation, it is no longer enough to hope your predictions are ethical; you have to make sure they actually are.

What is Predictive Analytics?

Predictive analytics is the process of analyzing existing datasets to make predictions. Companies use it to predict customer behavior, evaluate risk and monitor operations.

But without ethical guardrails, predictive models can unintentionally reinforce inequality, violate personal privacy, or operate as “black boxes” whose decisions people can’t understand or challenge.

Why Ethics Matter in Predictive Analytics

Ethical predictive analytics ensures that models:

- Treat individuals fairly

- Respect user privacy

- Provide transparency and accountability

- Comply with regulations like GDPR or CCPA

- Earn and maintain consumer trust

Unethical practices can lead to reputational damage, regulatory penalties, and public backlash—undermining the very advantages predictive analytics offers.

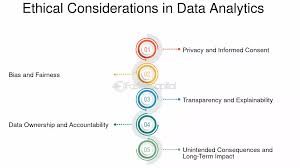

Bias in Predictive Analytics

Types of Bias

- Historical Bias: Rooted in past data that reflects social inequalities (e.g., biased hiring practices).

- Sampling Bias: When the training data isn’t representative of the broader population.

- Algorithmic Bias: Arising from how the model processes and weights data.

- Label Bias: Occurs when labels used in supervised learning are themselves biased.
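One rough way to spot sampling bias is to compare each group's share of the training data with its share of the population the model is meant to serve. The sketch below is illustrative only: the group labels, the reference population shares, and the 0.8 cut-off are all assumptions made for the example, not values taken from any standard.

```python
from collections import Counter

def representation_ratios(sample_groups, population_shares):
    """Return each group's share of the sample divided by its population share.

    A ratio well below 1.0 suggests the group is under-represented.
    """
    if not sample_groups:
        raise ValueError("sample_groups must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: (counts.get(group, 0) / total) / share
        for group, share in population_shares.items()
    }

def under_represented(ratios, threshold=0.8):
    """List groups whose representation ratio falls below the threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)

# Hypothetical group labels for 10 training rows.
sample = ["a"] * 7 + ["b"] * 2 + ["c"] * 1
# Hypothetical shares of each group in the target population.
population = {"a": 0.5, "b": 0.3, "c": 0.2}

ratios = representation_ratios(sample, population)
flagged = under_represented(ratios)
print(flagged)
```

A check like this only covers the attributes you think to measure, so it complements a review of how the data was collected rather than replacing it.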

Causes and Examples

- A credit scoring system penalizes certain zip codes because of past default rates, disproportionately affecting minority communities.

- An HR tool that screens resumes prioritizes male candidates because historical hiring data favored men.

Mitigation Strategies

- Bias Audits: Regularly test for disparate impacts.

- Diverse Data: Train on inclusive and representative datasets.

- Fairness-Aware Algorithms: Use algorithms designed to correct imbalances.

- Cross-Functional Teams: Involve ethicists, domain experts, and diverse stakeholders in model design.
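A bias audit for disparate impact can start with something as simple as comparing favorable-outcome rates between groups. The sketch below computes the ratio of the lowest to the highest selection rate and flags it against 0.8, the "four-fifths" rule of thumb used in US employment guidance. The group names and decision counts are made up for illustration, and the right threshold for your use case is an assumption to validate with legal and domain experts.

```python
def selection_rates(outcomes):
    """outcomes: iterable of (group, favorable) pairs.

    Returns the share of favorable outcomes per group.
    """
    totals, favorable = {}, {}
    for group, ok in outcomes:
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + (1 if ok else 0)
    return {group: favorable[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable outcome, so no gap to measure
    return min(rates.values()) / highest

# Hypothetical model decisions: (group, was the outcome favorable?)
decisions = (
    [("group_x", True)] * 60 + [("group_x", False)] * 40
    + [("group_y", True)] * 30 + [("group_y", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # four-fifths rule of thumb, an assumption here
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove discrimination on its own; it is a signal that the model and its training data deserve a closer look.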

Data Privacy in Predictive Analytics

Data Collection Ethics

Organizations must define clear, ethical policies around data collection. Gathering data without explicit user consent or beyond the stated purpose constitutes a breach of privacy.

Consent and Data Ownership

- Users should own their data and be allowed to access, correct, or delete it.

- Use informed consent: Explain how the data will be used, stored, and shared.
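In practice, consent and purpose limitation can be enforced in code before data ever reaches a model. The following is a minimal sketch under stated assumptions: `ConsentRecord`, `FIELDS_BY_PURPOSE`, and the purpose and field names are hypothetical and would map onto whatever consent system your organization actually uses.

```python
from dataclasses import dataclass, field

@dataclass
class ConsentRecord:
    """Hypothetical record of the purposes a user has agreed to."""
    user_id: str
    purposes: set = field(default_factory=set)

# Hypothetical mapping from purpose to the minimum fields that purpose needs.
FIELDS_BY_PURPOSE = {
    "churn_prediction": {"plan", "tenure_months"},
    "marketing": {"email", "plan"},
}

def minimized_view(record, consent, purpose):
    """Return only the fields needed for `purpose`, or None without consent."""
    if purpose not in consent.purposes:
        return None
    allowed = FIELDS_BY_PURPOSE.get(purpose, set())
    return {key: value for key, value in record.items() if key in allowed}

user = {"email": "user@example.com", "plan": "pro", "tenure_months": 14, "zip": "00000"}
consent = ConsentRecord(user_id="u1", purposes={"churn_prediction"})

churn_view = minimized_view(user, consent, "churn_prediction")
marketing_view = minimized_view(user, consent, "marketing")
print(churn_view, marketing_view)
```

Here the churn model sees only the plan and tenure, and the marketing request is refused because the user never agreed to that purpose.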

Legal and Compliance Frameworks

- GDPR (EU): Requires transparency, a lawful basis for processing such as user consent, and honors the right to erasure (the “right to be forgotten”).

- CCPA (California): Gives consumers the right to know what data is collected and to opt out of its sale.

- HIPAA (US Healthcare): Enforces strict privacy and security rules for health data.

Non-compliance can be costly. GDPR fines, for example, can reach 20 million euros or 4% of global annual turnover, whichever is higher, and violations also damage public trust.

Transparency and Accountability

Explainability in Algorithms

“Black-box” models can make it impossible for users or regulators to understand how decisions are made.

- Use explainable AI (XAI) techniques like LIME or SHAP to interpret model outcomes.

- Prefer interpretable models in high-stakes decisions (e.g., lending, healthcare).
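To show what an interpretable model buys you, the sketch below breaks a linear score into per-feature contributions relative to a baseline applicant. This is not LIME or SHAP themselves, just a hand-rolled illustration of the kind of per-feature explanation those tools aim to provide. The weights, feature names, and baseline values are all hypothetical.

```python
def explain_linear_score(weights, bias, baseline, applicant):
    """Break a linear score into per-feature contributions.

    contribution_i = weight_i * (applicant_i - baseline_i)
    so that score = baseline_score + sum(contributions).
    """
    contributions = {
        name: w * (applicant[name] - baseline[name]) for name, w in weights.items()
    }
    baseline_score = bias + sum(w * baseline[name] for name, w in weights.items())
    score = baseline_score + sum(contributions.values())
    return score, baseline_score, contributions

# Hypothetical lending model and inputs.
weights = {"income_k": 2.0, "debt_ratio": -50.0, "years_employed": 1.5}
bias = 10.0
baseline = {"income_k": 50, "debt_ratio": 0.3, "years_employed": 5}
applicant = {"income_k": 40, "debt_ratio": 0.5, "years_employed": 7}

score, baseline_score, contributions = explain_linear_score(
    weights, bias, baseline, applicant
)
# Rank features by how strongly they moved the score, in either direction.
ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
for name, value in ranked:
    print(f"{name}: {value:+.1f}")
```

An output like this can be turned directly into a plain-language reason ("your score was lowered mainly by income and debt ratio"), which is much harder to do with an opaque model.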

Governance and Oversight

- Create Ethics Committees or AI Review Boards.

- Document decision-making processes and maintain audit trails.

- Set up clear accountability chains: Who is responsible when something goes wrong?
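An audit trail is most useful when it is hard to alter after the fact. One possible design, sketched below, chains each log entry to the previous one with a SHA-256 hash so that later edits become detectable. The entry fields (`decision_id`, `model_version`, `outcome`, `owner`) are assumptions chosen for the example, and a production system would also need secure storage and access controls.

```python
import hashlib
import json

def _digest(prev_hash, entry):
    """Hash the previous hash together with a canonical form of the entry."""
    payload = json.dumps(entry, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

def append_entry(trail, entry):
    """Append an entry whose hash covers its content and the previous hash."""
    prev_hash = trail[-1]["hash"] if trail else ""
    trail.append(
        {"entry": entry, "prev_hash": prev_hash, "hash": _digest(prev_hash, entry)}
    )

def verify(trail):
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = ""
    for item in trail:
        if item["prev_hash"] != prev_hash:
            return False
        if item["hash"] != _digest(prev_hash, item["entry"]):
            return False
        prev_hash = item["hash"]
    return True

trail = []
append_entry(trail, {"decision_id": "d-001", "model_version": "v1",
                     "outcome": "declined", "owner": "credit-risk-team"})
append_entry(trail, {"decision_id": "d-002", "model_version": "v1",
                     "outcome": "approved", "owner": "credit-risk-team"})

intact_before = verify(trail)
trail[0]["entry"]["outcome"] = "approved"  # simulate tampering with a past decision
intact_after = verify(trail)
print(intact_before, intact_after)
```

Recording an `owner` with every decision also gives a concrete answer to the accountability question: each logged outcome names the team responsible for it.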

Communicating with Stakeholders

- Offer clear, jargon-free explanations of how models work and why decisions were made.

- Empower users to contest or appeal algorithmic decisions.

The Future of Sales: Why Ethical Predictive Analytics Matters

Predictive analytics is transforming the sales process, from lead scoring and customer segmentation to personalized marketing campaigns and churn prediction. However, ethical lapses can backfire:

- Biased algorithms might target the wrong audience or exclude valuable customers.

- Invasive data use can alienate users and reduce brand loyalty.

- Opaque decisions hinder trust in automation tools used by sales teams.

As AI, data analytics, and automation shape the future of sales, organizations must align their predictive systems with ethical best practices to ensure long-term value and trust.

Best Practices for Ethical Predictive Analytics

- Perform Bias Testing Regularly

- Ensure Transparent Documentation

- Establish Data Governance Policies

- Train Teams in Ethics and Compliance

- Prioritize Consent and Data Minimization

- Leverage Explainable AI Models

- Engage Cross-Functional Ethics Teams

- Continuously Monitor and Audit Models

These practices not only reduce legal risks but also foster responsible innovation and long-term stakeholder trust.
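Continuous monitoring, the last practice in the list above, often begins with checking whether live data still looks like the training data. The sketch below uses the population stability index (PSI), one common drift measure, over pre-binned shares. The bin shares are invented for the example, and the 0.1 and 0.25 alert levels are conventional rules of thumb rather than fixed standards.

```python
import math

def psi(expected_shares, actual_shares, eps=1e-6):
    """Population stability index between two binned distributions.

    PSI = sum((actual - expected) * ln(actual / expected)) over bins.
    Shares are clipped to `eps` to avoid taking the log of zero.
    """
    total = 0.0
    for expected, actual in zip(expected_shares, actual_shares):
        expected = max(expected, eps)
        actual = max(actual, eps)
        total += (actual - expected) * math.log(actual / expected)
    return total

def drift_level(value):
    """Map a PSI value to a rough alert level (thresholds are rules of thumb)."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "watch"
    return "investigate"

# Hypothetical share of records per score bin at training time and in production.
training = [0.25, 0.25, 0.25, 0.25]
live_same = [0.25, 0.25, 0.25, 0.25]
live_shifted = [0.10, 0.20, 0.30, 0.40]

stable = psi(training, live_same)
drifted = psi(training, live_shifted)
print(drift_level(stable), drift_level(drifted))
```

A drift alert is a prompt to rerun bias tests and review the model, not an automatic verdict that the model has become unfair.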

Many businesses have found that ethical considerations in predictive analytics (bias, privacy, transparency) are not merely academic concerns but business imperatives. The more AI, data analytics and automation come into play, the greater our shared responsibility to make sure these tools serve everyone equally.

Incorporating ethical considerations into model development allows organizations to maximize the value of predictive analytics while respecting human rights, legal boundaries, and societal norms. In the end, ethical predictive analytics is not only the right thing to do; it also makes good business sense.

Frequently Asked Questions (FAQ)

1. What are the main ethical concerns in predictive analytics?

The primary concerns are bias in decision-making, data privacy violations, and the lack of transparency in how models operate and make predictions.

2. How can predictive analytics be biased?

Bias can result from unbalanced data, flawed assumptions in algorithms, or historical inequalities embedded in the dataset, leading to unfair or discriminatory outcomes.

3. What laws govern data privacy in predictive analytics?

Major laws include the General Data Protection Regulation (GDPR) in Europe, the California Consumer Privacy Act (CCPA) in the U.S., and sector-specific laws like HIPAA in healthcare.

4. Why is transparency important in AI models?

Transparency ensures that decisions can be understood, challenged, and improved. It builds trust with users, helps meet regulatory requirements, and reduces the risk of unintended consequences.

5. How can businesses ensure ethical use of predictive analytics?

By conducting bias audits, using explainable models, obtaining informed consent, and establishing clear governance and accountability mechanisms.